Artificial Intelligence (AI) agents are increasingly integrated into systems that play critical roles in daily life, from healthcare diagnostics to autonomous vehicles and national defense. As their influence grows, so does the need to ensure that these agents operate safely and securely. In settings like these a single flaw can have severe consequences, which is why designing trustworthy AI systems is a priority for researchers, developers, and policymakers around the world.

Understanding Safety and Security in AI

Although the terms are often used interchangeably, safety and security in AI refer to different but complementary aspects:

- Safety refers to the AI agent’s ability to operate without causing unintended harm, even in unforeseen situations.

- Security focuses on protecting AI systems from malicious attacks, data breaches, or manipulation.

Ensuring both safety and security requires a combination of technical design, regulatory oversight, and continuous monitoring.

Key Strategies to Ensure Safety

To ensure safe behavior, AI agents are designed and tested using a variety of well-established methodologies:

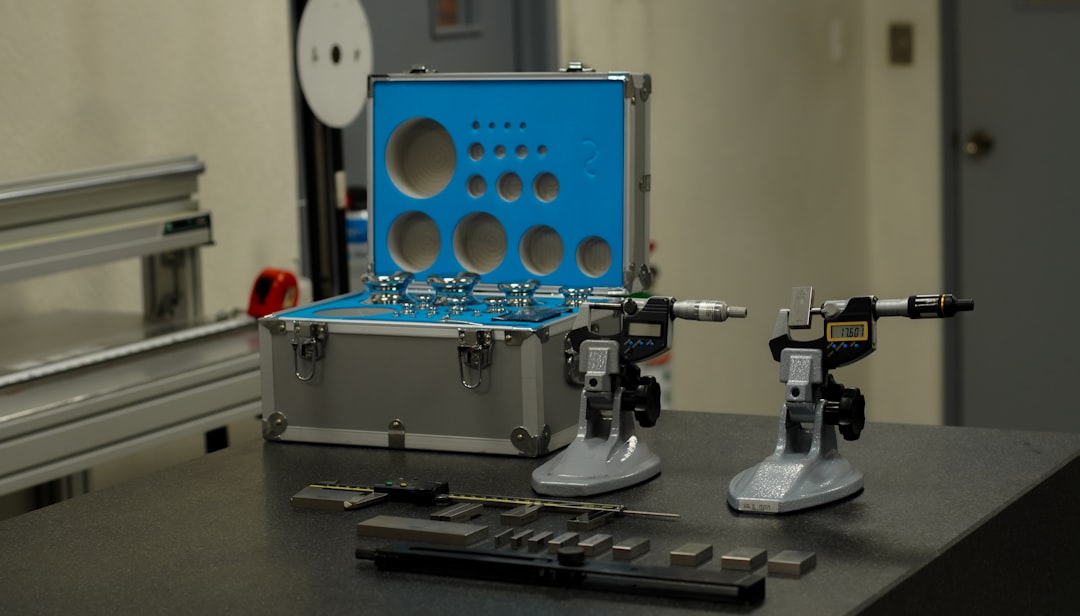

- Rigorous Testing and Validation: Just like aircraft systems, AI solutions must undergo extensive testing in controlled environments to validate that they behave as expected under both normal and exceptional circumstances.

- Formal Verification: Mathematical techniques are used to prove that certain critical properties always hold true. This is especially important in high-risk applications like medical diagnosis or autonomous driving.

- Fail-Safe Mechanisms: AI systems are often equipped with fallback protocols which ensure that, in the event of an error or unexpected behavior, the system reverts to a safe mode of operation.
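The fail-safe idea from the list above can be illustrated with a small wrapper. This is a minimal sketch, not a reference design: the names `Decision`, `with_fail_safe`, `toy_policy`, the `"stop"` action, and the 0.8 confidence threshold are all assumptions made for illustration. The wrapper falls back to a safe action whenever the underlying policy raises an error or reports low confidence.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical safe default; a real system would define its own safe mode.
SAFE_ACTION = "stop"


@dataclass
class Decision:
    action: str
    confidence: float  # assumed to lie in [0, 1]


def with_fail_safe(
    policy: Callable[[dict], Decision],
    min_confidence: float = 0.8,  # illustrative threshold, not a standard value
) -> Callable[[dict], str]:
    """Wrap a policy so errors and low-confidence outputs revert to SAFE_ACTION."""

    def guarded(observation: dict) -> str:
        try:
            decision = policy(observation)
        except Exception:
            # Any unexpected failure reverts to the safe mode.
            return SAFE_ACTION
        if decision.confidence < min_confidence:
            return SAFE_ACTION
        return decision.action

    return guarded


def toy_policy(observation: dict) -> Decision:
    """Hypothetical policy: raises KeyError if the sensor reading is missing."""
    distance = observation["distance_m"]
    if distance > 10:
        return Decision("proceed", 0.95)
    return Decision("slow_down", 0.6)


guarded = with_fail_safe(toy_policy)
```

With this sketch, `guarded({"distance_m": 20})` passes the policy's action through, while a missing reading (`guarded({})`) or a low-confidence decision falls back to the safe action. Keeping the fallback outside the policy means it still applies when the policy itself misbehaves.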

Security Considerations in AI Agents

As AI systems become more connected to the internet and other infrastructure, they also become more susceptible to various forms of cyberattack. Developers implement layers of safeguards to prevent vulnerabilities and address real-world threats:

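- Robust Encryption: Data processed or stored by AI systems should be encrypted both at rest and in transit. Encryption protects confidentiality; protecting integrity additionally requires authenticated encryption or a separate integrity check.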

- Regular Security Audits: Periodic reviews by independent experts can uncover hidden vulnerabilities or misconfigurations.

- Adversarial Training: During training, AI models are exposed to deliberately manipulated inputs, known as adversarial examples, so that they become harder to fool in real-world applications.

- Access Controls: Role-based access ensures that only authorized personnel can control or modify AI systems, limiting the potential impact of internal threats.
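Role-based access, the last item above, reduces to a lookup from role to permitted actions. The following is a minimal sketch under assumed names: the roles, the action strings, and the `PERMISSIONS` table are hypothetical and would be replaced by an organization's own policy.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    OPERATOR = "operator"
    ADMIN = "admin"


# Hypothetical permission table: each role maps to the actions it may perform.
PERMISSIONS = {
    Role.VIEWER: {"read_metrics"},
    Role.OPERATOR: {"read_metrics", "pause_agent"},
    Role.ADMIN: {"read_metrics", "pause_agent", "update_model"},
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True only if the role is explicitly granted the action."""
    return action in PERMISSIONS.get(role, set())


def perform(role: Role, action: str) -> str:
    """Run an action on the AI system, refusing anything not granted to the role."""
    if not is_allowed(role, action):
        raise PermissionError(f"{role.value} may not {action}")
    return f"{action} executed by {role.value}"
```

The table denies by default: an action that is not listed for a role is refused, so an operator can pause the agent but cannot call `perform(Role.OPERATOR, "update_model")` without raising `PermissionError`.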

Ethical and Legal Frameworks

In addition to technical measures, safety and security are reinforced by legal and ethical constraints:

- Comprehensive AI Regulations: Governments and international bodies are working to establish frameworks that set boundaries for AI use, ensuring transparency, fairness, and accountability.

- Ethical AI Guidelines: Organizations like the IEEE and OECD have published guidelines that help developers align AI behavior with societal values and ethical norms.

A trustworthy AI agent must not only comply with laws but also act responsibly, especially in scenarios involving privacy, bias, and discrimination.

Continuous Monitoring and Improvement

AI safety cannot be a one-time effort. These systems evolve, and so must the mechanisms that govern them:

- Real-Time Monitoring: AI systems can be paired with monitoring tools that detect anomalies in behavior or performance, triggering alerts or automatic shutdowns.

- Feedback and Iteration: Safe AI design is an ongoing process. User feedback, incident tracking, and performance data are used to continually refine AI algorithms.

- Red Teaming: Dedicated security experts simulate attacks on AI systems to identify weaknesses and reinforce defense strategies.
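As one way to picture the real-time monitoring described above, the sketch below flags a metric value that deviates sharply from its recent history and sets a shutdown flag. It is a simplified illustration: the class name `AnomalyMonitor`, the rolling z-score rule, and the default window and threshold are assumptions, and production monitoring would typically track many signals with more robust statistics.

```python
from collections import deque
from statistics import mean, stdev


class AnomalyMonitor:
    """Flags values far from the rolling mean of recent observations."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_samples: int = 5):
        self.history = deque(maxlen=window)  # recent normal observations
        self.threshold = threshold           # z-score limit (illustrative default)
        self.min_samples = max(2, min_samples)  # stdev needs at least two points
        self.shut_down = False               # set once an anomaly is detected

    def observe(self, value: float) -> bool:
        """Record a value; return True if it is anomalous."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
                self.shut_down = True  # stand-in for an alert or automatic shutdown
        if not anomalous:
            # Only normal values update the baseline, so outliers do not skew it.
            self.history.append(value)
        return anomalous
```

For example, after feeding latency readings such as 100, 102, 98, 101, 99, and 100, a sudden reading of 500 is flagged and `shut_down` becomes true. In a real deployment the flag would be wired to an alerting or shutdown path rather than left as an attribute.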

A Shared Responsibility

Ensuring the safety and security of AI agents is not solely the responsibility of developers or regulators. It is a shared mission that involves:

- Developers, who build and test AI with safety-first principles.

- Organizations, which implement strong internal policies and ethical use guidelines.

- Governments, which craft laws to govern design and deployment.

- Civil society, which holds institutions accountable and voices concerns.

As AI continues to advance, these collaborative efforts are vital to building systems that are not only intelligent but also trustworthy and secure.