The rapid rise of generative artificial intelligence has transformed how digital content is created, published, and consumed. From blog posts and product descriptions to news summaries and landing pages, AI tools are increasingly embedded into editorial workflows. As this shift accelerates, policymakers, search engines, and industry stakeholders are debating a controversial proposal: requiring clear labels on sections of content generated by AI. While transparency sounds straightforward in theory, the practical, legal, and strategic implications are anything but simple—especially when it comes to search engine optimization (SEO) compliance.

TLDR: Proposals to label AI‑generated sections of content aim to increase transparency but raise complex concerns around enforcement, fairness, and SEO impact. Critics argue that labeling could stigmatize high‑quality AI‑assisted content and create compliance confusion. Supporters believe disclosures build trust and prevent misinformation. For SEO professionals, mandatory labeling could affect rankings, click‑through rates, and content strategy in unpredictable ways.

The Push for Transparency in AI Content

The proposal to label AI‑generated content stems from broader concerns about misinformation, authenticity, and user trust. As generative AI becomes more sophisticated, distinguishing between human‑written and machine‑generated content is increasingly difficult. Regulators and advocacy groups argue that readers deserve to know when they are consuming content produced—either fully or partially—by automated systems.

Supporters of labeling requirements often frame the issue around three core principles:

- Transparency: Users should understand how content was created.

- Accountability: Publishers must remain responsible for automated outputs.

- Trust: Clear disclosure may reduce suspicion and misinformation.

At first glance, these goals seem reasonable. After all, industries such as advertising and finance already operate under strict disclosure rules. However, when applied to AI‑assisted content, the situation becomes far more complex.

Why the Proposal Is So Controversial

The controversy lies not in the concept of transparency itself, but in how such labeling would be implemented and what consequences it could produce.

1. Defining “AI‑Generated” Is Complicated

Modern content creation rarely involves a purely human or purely AI process. A writer may use AI to draft an outline, rephrase paragraphs, suggest headlines, or optimize keywords. If a post is 20% AI-assisted, does it require labeling? What about 50%? Would editing AI text eliminate the need for disclosure?

The boundary between AI-generated and AI-assisted content is blurred. Without a precise definition, enforcement becomes inconsistent and potentially unfair.
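To make the definitional problem concrete, here is a minimal Python sketch of how a percentage-based rule might be computed. Everything in it is an assumption for illustration: the `Segment` type, the three origin categories, and the 20% threshold are hypothetical and do not come from any actual proposal. It shows that the same post can land above or below a threshold depending only on whether human-edited AI text is counted.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    words: int
    origin: str  # hypothetical categories: "human", "ai", or "ai_edited"


def ai_share(segments, count_edited):
    """Fraction of words attributed to AI under one counting rule."""
    total = sum(s.words for s in segments)
    if total == 0:
        return 0.0
    counted = {"ai", "ai_edited"} if count_edited else {"ai"}
    ai_words = sum(s.words for s in segments if s.origin in counted)
    return ai_words / total


# A hypothetical 1,000-word post with mixed provenance.
post = [
    Segment(600, "human"),
    Segment(150, "ai"),
    Segment(250, "ai_edited"),
]

THRESHOLD = 0.20  # hypothetical labeling threshold

strict = ai_share(post, count_edited=True)    # 400 of 1,000 words
lenient = ai_share(post, count_edited=False)  # 150 of 1,000 words

print("label required (edited text counts):", strict >= THRESHOLD)
print("label required (edited text excluded):", lenient >= THRESHOLD)
```

Under the stricter counting rule the post would need a label, and under the lenient one it would not, even though nothing about the content itself changed.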

2. Stigmatization of AI Content

Another major concern is stigma. If readers begin associating AI labels with low quality, publishers may avoid transparent practices simply to preserve credibility—even if AI tools were used responsibly.

This creates a paradox: labeling designed to enhance trust could inadvertently reduce it.

3. Uneven Competitive Impact

Large enterprises often have legal and compliance teams capable of navigating new regulations. Smaller publishers, bloggers, and startups may struggle to interpret or implement labeling requirements correctly. In an already competitive SEO landscape, additional compliance burdens could widen the gap between major brands and independent creators.

4. Enforcement and Verification Challenges

Unlike sponsored content disclosures, which are relatively straightforward, verifying whether content was AI-generated is technically challenging. AI detection tools remain imperfect and frequently produce false positives and negatives. Regulators might rely on self-reporting, but that introduces risks of inconsistent adherence.

Potential Impact on SEO Compliance

Search engines such as Google have repeatedly emphasized that they prioritize quality, helpfulness, and expertise over the method of content creation. In principle, AI content is not penalized solely because it was AI-generated. However, mandatory labeling regulations could introduce new SEO dynamics.

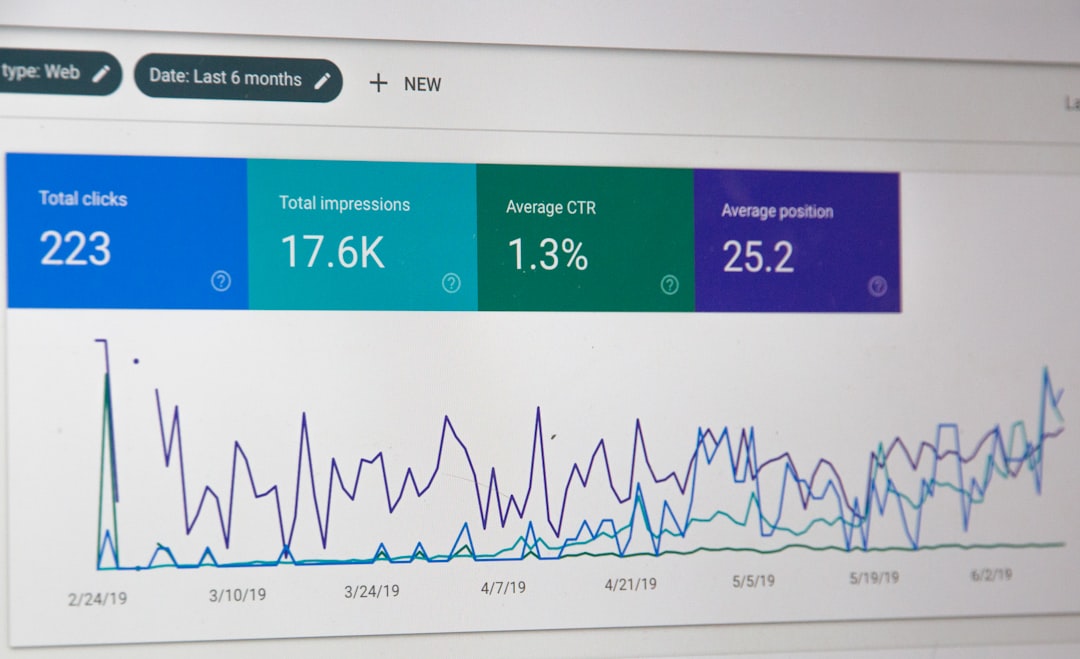

1. Click‑Through Rates (CTR)

If search results or on-page content prominently display “AI-generated” disclaimers, user perception could shift. Some users may avoid AI-labeled results, leading to reduced click‑through rates. Lower CTR can indirectly influence search performance over time.

On the other hand, certain audiences may become indifferent to AI disclosures, or even appreciate the transparency, which would mitigate negative effects.

2. E‑E‑A‑T Signals

Google’s framework for evaluating content emphasizes Experience, Expertise, Authoritativeness, and Trustworthiness (E‑E‑A‑T). If labeling AI usage undermines perceived experience or expertise, pages could struggle to maintain strong credibility signals.

Consider industries such as healthcare, finance, or law. If a medical article discloses that substantial portions were AI‑generated, readers might question the reliability of the information—even if a qualified professional reviewed it.

3. Algorithm Adjustments

It remains unclear whether search engines would incorporate AI‑label metadata into ranking signals. If structured markup were introduced to identify AI-generated sections, algorithms could theoretically use that data in evaluation.

This raises critical questions:

- Would unlabeled AI content be penalized?

- Would labeled content be deprioritized?

- Could excessive automation trigger quality filters?

The uncertainty alone may reshape SEO strategies, pushing businesses toward hybrid models that emphasize stronger human oversight.
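As a rough illustration of what section-level AI metadata could look like if structured markup were introduced, the Python sketch below builds a disclosure record and serializes it as JSON. No such standard exists today as far as this article is aware: the field names (`aiDisclosureVersion`, `aiInvolvement`, `humanReviewed`) and the three involvement levels are purely hypothetical, and nothing here reflects a format that any search engine reads.

```python
import json

# Hypothetical involvement levels; not part of any published standard.
ALLOWED_INVOLVEMENT = {"none", "assisted", "generated"}


def build_ai_disclosure(sections, human_reviewed):
    """Build a hypothetical page-level disclosure record.

    `sections` is a list of (section_id, involvement) pairs.
    """
    for section_id, involvement in sections:
        if involvement not in ALLOWED_INVOLVEMENT:
            raise ValueError(
                f"unknown involvement {involvement!r} for section {section_id!r}"
            )
    return {
        "aiDisclosureVersion": "0.1-hypothetical",
        "humanReviewed": bool(human_reviewed),
        "sections": [
            {"id": section_id, "aiInvolvement": involvement}
            for section_id, involvement in sections
        ],
    }


record = build_ai_disclosure(
    [("intro", "none"), ("summary", "generated"), ("analysis", "assisted")],
    human_reviewed=True,
)
print(json.dumps(record, indent=2))
```

Whether a record like this would ever feed into ranking is exactly the open question raised above; the sketch only shows that the data itself would be simple to produce.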

4. Content Audits and Documentation

SEO compliance could increasingly require internal documentation showing how AI tools were used. Organizations might need to maintain:

- Editorial review logs

- Human fact‑checking records

- Workflow transparency reports

While such measures may elevate content standards, they also increase operational costs.
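One lightweight way to keep such records is a structured log with one entry per reviewed page. The Python sketch below is an assumption about what an entry might contain, not a format required by any regulation: the `ReviewLogEntry` fields and the helper names are hypothetical, and the URL, tool, and reviewer values are placeholders.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class ReviewLogEntry:
    page_url: str
    ai_tool: str        # which tool was used (free-text, hypothetical field)
    ai_usage: str       # e.g. "outline", "draft", "rephrasing"
    reviewer: str       # person who performed the editorial review
    fact_checked: bool  # whether a human fact-check was completed
    reviewed_at: str    # ISO 8601 timestamp in UTC


def make_entry(page_url, ai_tool, ai_usage, reviewer, fact_checked):
    """Create a log entry stamped with the current UTC time."""
    return ReviewLogEntry(
        page_url=page_url,
        ai_tool=ai_tool,
        ai_usage=ai_usage,
        reviewer=reviewer,
        fact_checked=fact_checked,
        reviewed_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(entry):
    """Serialize an entry as a single JSON line for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)


entry = make_entry(
    page_url="https://example.com/blog/post",
    ai_tool="example-assistant",
    ai_usage="draft",
    reviewer="J. Editor",
    fact_checked=True,
)
print(to_json_line(entry))
```

Appending one line per review keeps the log easy to audit later, though the cost of filling it in for every page is part of the operational burden described above.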

The Publisher’s Dilemma

For publishers, the debate creates a strategic balancing act. On one side lies efficiency: AI dramatically reduces production time and costs. On the other lies compliance risk: unclear regulations may expose organizations to penalties or reputational harm.

Many publishers are already adopting internal policies such as:

- Human-in-the-loop editing: Every AI draft undergoes expert review.

- Selective disclosure: Transparency statements about AI assistance without labeling every paragraph.

- Content segmentation: Using AI for data summaries while reserving human authorship for analysis and opinion.

These hybrid approaches attempt to strike a balance between innovation and responsibility.

Arguments in Favor of Labeling

Despite the controversy, labeling proponents present compelling arguments.

- Improved media literacy: Clear disclosures may help users better understand how modern content ecosystems function.

- Reduced misinformation risk: In politically sensitive or crisis-related contexts, knowing content was automated may encourage readers to cross-check information.

- Market differentiation: Human-only content creators might leverage disclosures as a competitive advantage.

In a digital world saturated with information, transparency could serve as a stabilizing factor.

Arguments Against Mandatory Labeling

Critics caution that mandatory labeling could produce unintended consequences, including:

- Innovation slowdown: Fear of regulatory consequences could discourage experimentation.

- Ambiguity in application: Partial AI assistance complicates clear classification.

- Selective enforcement: Smaller entities may face disproportionate scrutiny.

- Overemphasis on method instead of quality: Good content should be judged on merit, not origin.

Many argue that a quality-based evaluation framework—rather than a production-based one—would better align with SEO best practices.

What It Could Mean for the Future of SEO

If labeling requirements become widespread, SEO strategies may evolve in several important ways.

- Greater emphasis on author branding: Highlighting human credentials, bios, and subject‑matter expertise may counterbalance AI disclosures.

- Stronger editorial standards: Demonstrable review processes could serve as differentiators in competitive niches.

- Structured transparency: Standardized metadata fields for AI involvement might emerge, similar to schema markup.

- Shift toward value-driven content: Original insights, case studies, and firsthand experiences, the areas where human input is strongest, may gain increased importance.

Rather than eliminating AI from SEO workflows, labeling policies could push the industry toward more responsible and strategic usage.

A Middle Ground: Contextual Disclosure

Some experts advocate for contextual disclosure rather than blanket labels. For example:

- Disclosing AI usage in site-wide editorial policies

- Labeling fully automated content but not lightly assisted material

- Focusing on high-risk sectors such as health and finance

This flexible approach may preserve transparency while minimizing negative SEO side effects.
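The three ideas above can be combined into a single decision rule. The Python sketch below is one possible combination, and it is an assumption rather than a policy anyone has proposed in this form: the sector list, the automation levels, and the returned actions are all hypothetical.

```python
# Hypothetical set of sectors treated as high risk.
HIGH_RISK_SECTORS = {"health", "finance"}

# Hypothetical automation levels for a piece of content.
AUTOMATION_LEVELS = ("none", "assisted", "full")


def disclosure_for(sector, automation):
    """Return a hypothetical disclosure action for a piece of content.

    "label"       -> show an on-page AI label
    "site_policy" -> rely on the site-wide editorial policy
    "none"        -> no disclosure needed
    """
    if automation not in AUTOMATION_LEVELS:
        raise ValueError(f"unknown automation level: {automation!r}")
    if automation == "full":
        return "label"
    if automation == "assisted":
        # Lightly assisted content is labeled only in high-risk sectors.
        return "label" if sector in HIGH_RISK_SECTORS else "site_policy"
    return "none"


for sector, automation in [
    ("health", "assisted"),
    ("travel", "assisted"),
    ("travel", "full"),
    ("finance", "none"),
]:
    print(sector, automation, "->", disclosure_for(sector, automation))
```

Writing the rule down this way also exposes its weak points: someone still has to decide which sectors count as high risk and where "assisted" ends and "full" begins.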

Conclusion

The proposal to label sections of AI‑generated content highlights a deeper tension between technological innovation and regulatory oversight. While transparency fosters trust, rigid labeling rules risk oversimplifying how modern content is created. For SEO professionals, the stakes are significant: disclosure policies could influence user behavior, ranking signals, and competitive dynamics.

Ultimately, the debate may shift from whether AI is used to how responsibly it is integrated. If search engines continue prioritizing helpful, authoritative, and trustworthy content, then thoughtful human oversight—regardless of tool usage—will remain the most sustainable path forward. Transparency will likely become a component of SEO compliance, but quality will still reign supreme.